House Price Prediction

Abstract:

The House Price Index (HPI) is a popular tool for estimating changes in housing prices. Because housing prices are strongly correlated with other factors such as location, area, and population, predicting individual housing prices requires information beyond the HPI. A significant number of papers have used conventional machine learning methods to predict housing prices. However, they rarely examine the performance of specific models, and they ignore less common but more complex models. To investigate the differences between several advanced models, this paper therefore uses both traditional and modern machine learning strategies and looks into the effects of features on the prediction techniques.

Short introduction:

We decided to enter Kaggle's advanced regression methods competition and take you along for the ride. If you're new to machine learning and would like to see a project from start to finish, stick around. We'll go over the steps we took while also attempting to deliver a basic course in machine learning.

Objective:

The goal of the competition is to forecast home sale prices in Timisoara. A training data set and a test data set in CSV format, along with a data dictionary, are provided.

Training: Our training data contains many examples of houses, each described by many features that cover every facet of the house. Each house's sale price (the label) is given to us. The training data will be used to "teach" our models.

Testing: The test data set contains the same features as the training data, except for the sale price, which is excluded because it is what we are trying to predict. Once we have built our models, we run the best one on the test data and submit its predictions to the Kaggle leaderboard.
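As a minimal sketch of the training/test layout described above, the snippet below loads two tiny in-memory CSVs with pandas and separates the label from the features. The column names (`Id`, `Area`, `Rooms`, `SalePrice`) and the values are illustrative assumptions, not the competition's actual schema.

```python
import io

import pandas as pd

# Tiny in-memory stand-ins for the provided CSV files.
# Column names and values are hypothetical, chosen only for illustration.
train_csv = io.StringIO(
    "Id,Area,Rooms,SalePrice\n"
    "1,50,2,60000\n"
    "2,70,3,85000\n"
    "3,90,4,110000\n"
)
test_csv = io.StringIO(
    "Id,Area,Rooms\n"
    "4,80,3\n"
    "5,120,5\n"
)

train = pd.read_csv(train_csv)
test = pd.read_csv(test_csv)

# Separate the label (sale price) from the features in the training data.
y_train = train["SalePrice"]
X_train = train.drop(columns=["SalePrice"])

# The test data has the same feature columns but no label column.
same_features = list(X_train.columns) == list(test.columns)
```

With real files, the `io.StringIO` objects would simply be replaced by the paths to the downloaded CSVs.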

Task:

Machine learning tasks are typically classified into three types: supervised, unsupervised, and reinforcement learning. Our task for this competition is supervised learning.

The type of machine learning task presented to you is easy to identify from the data you have and your goal. We've been provided housing data with features and labels, and we're supposed to predict the labels for houses that aren't in our training set.
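To make the supervised setting concrete, here is a small sketch that fits a model on labelled houses and predicts the label of a house it has not seen. The features (area and room count), the prices, and the choice of `LinearRegression` are assumptions made for illustration only.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical features: [area in square metres, number of rooms].
X_train = np.array([[50, 2], [70, 3], [90, 4], [120, 5]], dtype=float)
# Hypothetical labels: the sale price of each house.
y_train = np.array([60_000, 85_000, 110_000, 145_000], dtype=float)

# Supervised learning: the model sees both features and labels while training.
model = LinearRegression()
model.fit(X_train, y_train)

# Predict the label for a house that is not in the training set.
X_new = np.array([[80, 3]], dtype=float)
predicted_price = model.predict(X_new)[0]
```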

Tools:

For this project, we used Python and Jupyter notebooks. Jupyter notebooks are popular among data scientists because they are simple to use and show each step of your work.

In general, most machine learning projects follow the same procedure. Data ingestion, data cleaning, exploratory data analysis, feature engineering, and finally machine learning are all steps in the process. Because the pipeline is not linear, you may have to switch back and forth between stages. It's important to note this because tutorials frequently lead you to believe that the procedure is much cleaner than it actually is. Bear this in mind because your first machine learning project may be a disaster.
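One way to keep the cleaning, feature preparation, and modeling stages together while you iterate is a scikit-learn `Pipeline`. The sketch below is only an illustration under assumed data and step choices (median imputation, standard scaling, linear regression); it is not the exact sequence used in this project.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical data: [area, rooms] with one missing room count.
X = np.array([[50.0, 2.0], [70.0, np.nan], [90.0, 4.0], [120.0, 5.0]])
y = np.array([60_000.0, 85_000.0, 110_000.0, 145_000.0])

# Each stage is a named step, so a single stage can be swapped or tuned
# without rewriting the rest of the workflow.
pipeline = Pipeline(steps=[
    ("clean", SimpleImputer(strategy="median")),  # data cleaning
    ("scale", StandardScaler()),                  # feature preparation
    ("model", LinearRegression()),                # machine learning
])

pipeline.fit(X, y)
predictions = pipeline.predict(X)
```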

Data cleaning:

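First, we distinguish between null values that are genuinely missing and null values that carry a meaning (for example, that a house does not have the feature at all). For categorical variables, we impute nulls that carry a meaning with a constant value, and we impute genuinely missing values with the column mode.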
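The two imputation strategies for categorical variables can be sketched with pandas as follows. The column names and the constant `"None"` are assumptions chosen for illustration; which columns get which treatment depends on the data dictionary.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    # Hypothetical column where a null means "the house has no pool".
    "PoolQuality": ["Good", np.nan, np.nan, "Fair"],
    # Hypothetical column where a null is a genuinely missing value.
    "Heating": ["Gas", "Gas", np.nan, "Electric"],
})

# Impute using a constant value: the null carries a meaning.
df["PoolQuality"] = df["PoolQuality"].fillna("None")

# Impute using the column mode: the null is a missing observation.
df["Heating"] = df["Heating"].fillna(df["Heating"].mode()[0])
```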

Next, we clean the numerical data by filling in missing values using KNN imputation.

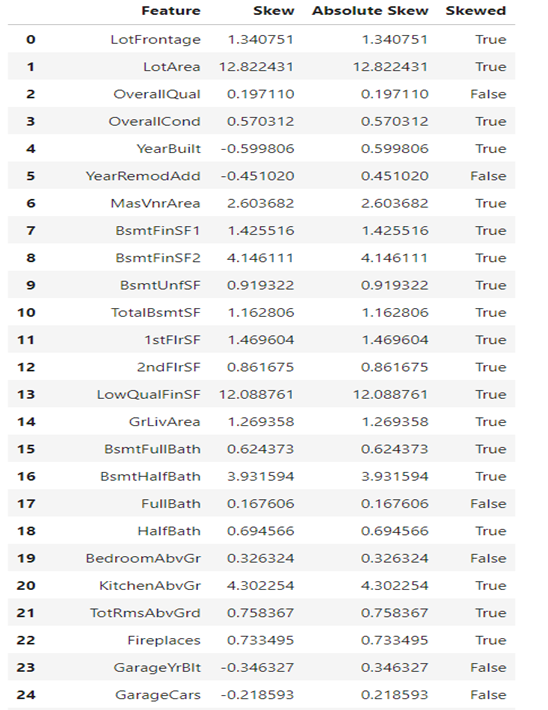
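A minimal sketch of KNN imputation on numerical columns, using scikit-learn's `KNNImputer`. The column names, the values, and `n_neighbors=2` are assumptions for this toy example and may differ from the settings used in the notebook.

```python
import numpy as np
import pandas as pd
from sklearn.impute import KNNImputer

# Hypothetical numeric features with a few missing values.
numeric = pd.DataFrame({
    "Area": [50.0, 70.0, np.nan, 120.0, 65.0],
    "Rooms": [2.0, 3.0, 4.0, 5.0, np.nan],
    "Age": [10.0, 5.0, 20.0, 3.0, 8.0],
})

# Each missing value is replaced by the mean of that feature over the
# nearest rows, where distance is computed on the features both rows have.
imputer = KNNImputer(n_neighbors=2)
filled = pd.DataFrame(imputer.fit_transform(numeric), columns=numeric.columns)
```

Because the distance is computed on raw values, features with large ranges dominate the neighbor search, so scaling the columns first is often worth considering.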

We then take the column names of the numeric features, compute the skew of each column, and apply a log transform to the skewed features.
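The skew check and log transform might look like the sketch below. The data, the column names, and the skew threshold of 0.75 are assumptions; `np.log1p` (log of 1 + x) is used here so that zero values do not cause problems.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "LotArea": [500.0, 600.0, 550.0, 650.0, 700.0, 9000.0],  # one large outlier
    "Rooms": [2.0, 3.0, 4.0, 3.0, 2.0, 4.0],                  # roughly symmetric
    "Street": ["A", "B", "A", "C", "B", "A"],                  # not numeric
})

# Take the column names of the numeric features.
numeric_cols = df.select_dtypes(include="number").columns

# Compute the skew of each numeric column.
skewness = df[numeric_cols].skew()

# Assumed cut-off: treat a column as skewed above this absolute skew.
SKEW_THRESHOLD = 0.75
skewed_cols = skewness[skewness.abs() > SKEW_THRESHOLD].index

# Log-transform only the skewed columns.
df[skewed_cols] = np.log1p(df[skewed_cols])
```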


Exploratory Data Analysis (EDA):

This is frequently where our data visualization journey begins. In machine learning, EDA is used to investigate the quality of our data. Labels: We used a histogram to plot the sale price. The distribution of sale prices is skewed, which is to be expected. It is not uncommon to see a few reasonably priced houses in one's neighborhood.
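A histogram of the label can be drawn with Matplotlib as sketched below. Since the competition data is not reproduced here, the sale prices are synthetic values drawn from a log-normal distribution, which is purely an assumption used to get a right-skewed shape.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend, so this also runs without a display

import matplotlib.pyplot as plt
import numpy as np

# Synthetic, right-skewed stand-in for the sale price column.
rng = np.random.default_rng(0)
sale_price = rng.lognormal(mean=12.0, sigma=0.4, size=1000)

fig, ax = plt.subplots(figsize=(6, 4))
counts, bin_edges, _ = ax.hist(sale_price, bins=30)
ax.set_xlabel("Sale price")
ax.set_ylabel("Number of houses")
ax.set_title("Distribution of sale prices")
```

In a Jupyter notebook the backend line can be dropped and the figure is displayed inline. A mean that sits above the median is a quick numerical hint of the same right skew.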


Conclusion:

AI is the most effective way to predict prices with high precision. The data is from Mumbai and the method is the decision tree. The decision tree regressor gives an accuracy of 89%.

House price prediction helps sellers (who own or build a property) and buyers put a fair price on a house. The variables that affect house prices include the number of rooms, the age of the property, the postal region, and so on. In this paper, two more variables are added: air quality and noise pollution. The system design and architecture in this article consist of collecting data from different websites, processing the data to clean the file, splitting it into two modules (a training set and a test set), testing, and finally integrating with the UI.

Among multiple linear regression, the decision tree regressor, and KNN, the decision tree regressor fitted their dataset best. The decision tree regressor identifies the relevant features and trains a tree-shaped model to forecast future data and provide a meaningful result. After building the model and producing the result, the next stage is integration with the UI.
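A comparison of the three model families named above can be sketched with scikit-learn as follows. The data is synthetic and the hyperparameters are assumptions, so the ranking it produces says nothing about the Mumbai dataset. Note also that `score` returns the coefficient of determination (R²) for regressors; whether the reported 89% accuracy refers to R² is an assumption.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor
from sklearn.tree import DecisionTreeRegressor

# Synthetic housing data: area, rooms, and age drive the price, plus noise.
rng = np.random.default_rng(42)
n = 300
area = rng.uniform(40, 200, n)
rooms = rng.integers(1, 7, n)
age = rng.uniform(0, 50, n)
X = np.column_stack([area, rooms, age])
y = 1000 * area + 5000 * rooms - 300 * age + rng.normal(0, 5000, n)

# Hold out 20% of the houses to evaluate each model on unseen data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

models = {
    "Multiple linear regression": LinearRegression(),
    "Decision tree regressor": DecisionTreeRegressor(max_depth=5, random_state=0),
    "KNN regressor": KNeighborsRegressor(n_neighbors=5),
}

# Fit each model and record its R^2 on the held-out test set.
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)
```

Because this toy price is generated by a linear formula, the linear model has an advantage here; on real data with non-linear effects, a tree-based model may well fit better, as the authors report.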

The implementation consists of data processing, a statistical representation of the dataset, visualization using Matplotlib, and fitting the model using the regressor.

In the end, they displayed a plot of predicted versus actual prices, together with the accuracy of the predictions.

The decision tree machine learning algorithm is used in this work to develop a prediction model for the potential selling price of any real estate property. New parameters such as air quality and crime rate were added to the dataset to help predict prices even more accurately.

https://kalaharijournals.com/resources/APRIL_15.pdf

References

[1] Burkov, A. (2019). The Hundred-Page Machine Learning Book, pp. 84–85.